Executable loading and startup performance on macOS

Recently, I fixed a macOS-specific startup performance regression in Node.js after an extensive investigation. Along the way, I learned a lot about tools for macOS and Node.js compilation workflows that don’t seem to be particularly well documented on the Internet, so this is my attempt to put more useful information out there in the hope that it can help someone else doing something similar.

Spoiler & TL;DR: When compiling a large C++ program on macOS, make sure to build your statically linked libraries with proper symbol visibility settings, e.g. by explicitly marking all the public symbols as visible and compiling the code with -fvisibility=hidden. Otherwise, you might end up with unexpected weak symbol fixups from C++ template instantiations happening at runtime, which add startup overhead.

A mysterious regression

The story began with a startup performance regression noticed by RafaelGSS and described in this issue: between Node.js 20 and 22, the time taken to start Node.js and run an empty script on a typical macOS machine rose from ~19ms to ~27ms, as measured by hyperfine. By Node.js 23 it had climbed to ~30ms. Curiously, the regression appeared to be specific to macOS and didn’t reproduce on Linux.

Now, a 10ms delay for starting up a process arguably isn’t that much, especially when it’s only reproducible on macOS where machines tend to be very beefy. But as the overhead was climbing, it seemed worthwhile to at least find out what’s going on and contain it somehow.

1. Bisecting Node.js nightly builds

This part is very specific to the release/version management of the Node.js project. If you are not particularly interested in it, feel free to skip to section 2.

The regression appeared to have happened quite some time ago (v21 was released in October 2023 and v22 in April 2024, and we only noticed this macOS-only regression in October 2024), so bisecting by building commits directly from this timespan would have taken a long time. Fortunately, Node.js maintains nightly builds, which are available here. So the first thing I did was to bisect the regression using nightly builds, looking for a suspicious jump on a particular date. Indeed, there was a jump on the date the V8 11.3 -> 11.8 upgrade landed. (Node.js doesn’t always upgrade V8 incrementally: when build issues pile up, the upgrade can accumulate into a bigger version jump, and in this case it jumped across five V8 releases.) I built the commits before and after the V8 upgrade and confirmed that the 10ms regression happened during the upgrade.
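The date bisection itself is an ordinary binary search over builds sorted by date. Below is a minimal C++ sketch, assuming the regression persists once it is introduced; `FirstRegressedBuild` and the `is_slow` predicate are hypothetical stand-ins for "install that nightly with nvs and time it with hyperfine".

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <vector>

// Returns the index of the first build for which `is_slow` is true, assuming
// `builds` is sorted by date and every build after the first slow one is also
// slow. Returns builds.size() if no build is slow.
std::size_t FirstRegressedBuild(
    const std::vector<std::string>& builds,
    const std::function<bool(const std::string&)>& is_slow) {
  std::size_t lo = 0;
  std::size_t hi = builds.size();  // the answer lies in [lo, hi]
  while (lo < hi) {
    std::size_t mid = lo + (hi - lo) / 2;
    if (is_slow(builds[mid])) {
      hi = mid;      // the regression is at mid or earlier
    } else {
      lo = mid + 1;  // the regression is after mid
    }
  }
  return lo;
}
```

With a year of nightlies (roughly 365 builds), this needs about 9 measurements rather than hundreds.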

1.1 Managing the Node.js nightly builds locally

Personally I use nvs to manage my nightly builds locally. For example, to install the nightly produced on 2023-10-14, I’d:

- Look up the file name containing 20231014 in the listing at https://nodejs.org/download/nightly/ (surely there is a clever way to save this step, but this dumb method works for me).

- Run this:

```
# The nightly remote must be added first
# nvs remote add nightly https://nodejs.org/download/nightly/
nvs add nightly/21.0.0-nightly20231014d1ef6aa2db
nvs use nightly/21.0.0-nightly20231014d1ef6aa2db
```
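The manual lookup step could be scripted. Here is a rough C++ sketch of just the matching part, assuming the directory listing has already been fetched and split into entry names shaped like "v21.0.0-nightly20231014d1ef6aa2db/" (the leading "v" and trailing "/" are assumptions about the listing; fetching and parsing the HTML is left out). `FindBuild` is a hypothetical helper, and the `tag` parameter assumes the v8-canary names follow the same pattern with "v8-canary" in place of "nightly".

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <vector>

// Returns the version string to pass to `nvs add`, e.g.
// "21.0.0-nightly20231014d1ef6aa2db", for the first listing entry built on
// `date` (yyyymmdd) in the channel `tag`, or std::nullopt if none matches.
std::optional<std::string> FindBuild(const std::vector<std::string>& entries,
                                     const std::string& tag,
                                     const std::string& date) {
  const std::string needle = "-" + tag + date;
  for (std::string name : entries) {  // copy: we trim it below
    if (name.find(needle) == std::string::npos) continue;
    if (!name.empty() && name.back() == '/') name.pop_back();     // dir suffix
    if (!name.empty() && name.front() == 'v') name.erase(0, 1);   // "v" prefix
    return name;
  }
  return std::nullopt;
}
```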

1.2 Managing Node.js v8-canary builds locally

The V8 11.3 -> 11.8 upgrade was a massive change (+245,743 −136,195), so obviously I needed something more granular to look at. Node.js also maintains a special release channel called v8-canary, which contains daily builds of Node.js’s main branch combined with V8’s main branch using Node.js’s own build configuration. For example, to install the build produced on 2023-07-12 using nvs, I’d:

- Look up the file name containing 20230712 in the listing at https://nodejs.org/download/v8-canary/ (again, it’s dumb but it works).

- Run this:

```
# The canary remote must be added first
# nvs remote add canary https://nodejs.org/download/v8-canary/
# Install the build from 2023-07-12, with file name looked up from the URL above
nvs add canary/21.0.0-v8-canary20230712b8a5b2645b
nvs use canary/21.0.0-v8-canary20230712b8a5b2645b
```

Unlike the Node.js nightly builds, the Node.js v8-canary builds are not always available for every platform every day. They are built with Node.js’s own GYP build configurations while the V8 source code is updated automatically, and from time to time the GYP configurations get broken by changes in V8 upstream (V8 itself also tests Node.js integration, but with a GN build, so the two are not in sync). Catching these breakages early and fixing them before the proper V8 upgrade in the Node.js main branch is precisely why the v8-canary build was set up. Meanwhile, on the days when the build is being fixed, no v8-canary builds are available for download.

With limited pre-built binaries available, I couldn’t pinpoint a small enough V8 change range that seemed particularly suspicious. It was not impossible to build and bisect V8 commits combined with the Node.js main branch locally, but that could involve solving numerous interim build errors that would not be needed anywhere else, so I was not particularly keen on doing it. The only valid conclusion I could draw from available builds was still that the startup performance was getting worse over time and it was likely coming from somewhere in the V8 11.3 -> 11.8 changes.

2. Narrowing down the startup path with the regression

With the V8 upgrade identified as the likely source of the regression, the next step was to pinpoint where in the startup process the overhead was introduced. To do this, I started modifying the startup code locally to isolate the problematic sections.

I began by simplifying the startup code incrementally:

- Running an empty script (node empty.js), which involves the full bootstrap and module loading.

- Just printing the version string (node --version), which only parses command-line arguments and outputs the Node.js version without doing the full bootstrap.

- Commenting out the entire main function and having it return 0 immediately.

I ended up reproducing the regression with the main function modified to be a no-op.

```
diff --git a/src/node_main.cc b/src/node_main.cc
```

```
0522ac08 was the commit before the V8 upgrade, 86cb23d0 was the commit after the V8
```

Apparently, even with an empty main function, startup still regressed by ~10ms across the V8 upgrade, so the problematic bits happened somewhere outside of the main function.

3. Checking dynamic libraries

With the regression confirmed to be outside the main function, my next hypothesis was that it might be related to the loading of dynamic libraries.

To inspect what’s dynamically loaded by an executable on macOS, I tried dyld_info:

```
$ dyld_info -dependents ./node-86cb23d0
```

(I later found out that DYLD_PRINT_LIBRARIES=1 can also be used to log what’s actually loaded dynamically, though in this case it would still have yielded the same output for the two executables, because dynamic library loading did not change in the regression and that wasn’t where the problem lay.)

No matter how I looked, it seemed that the two different versions loaded identical dynamic libraries in the same way. I also tried to review changes in the build files during the V8 upgrade, but could not find anything that would matter for dynamic library loading, either.

4. Profiling/tracing startup code (esp. static initialization)

Since the loading of the dynamic libraries seemed to be irrelevant, the next suspects were static initializers. Node.js is a big code base with various static initializers scattered around (some of its own, but many from its dependencies), and the regression happened in a huge diff, so it would have been quite challenging to figure out what changed in static initialization just by reading the diff. I turned to profilers for help instead.
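For readers less familiar with static initializers: a namespace-scope object with a non-trivial constructor is initialized before main() runs, so its cost counts toward startup time even when main() returns immediately. A minimal illustration, not taken from Node.js or V8; `RegisterBuiltins` and `Registry` are hypothetical names.

```cpp
#include <cassert>
#include <map>
#include <string>

namespace {

// Set by the static initializer below, so main() can observe that it ran.
bool g_initialized_before_main = false;

// Stand-in for the kind of table a large C++ code base populates at startup.
// Constructed on first use to avoid static initialization order issues.
std::map<std::string, int>& Registry() {
  static std::map<std::string, int> registry;
  return registry;
}

struct RegisterBuiltins {
  RegisterBuiltins() {
    Registry()["Add"] = 1;
    Registry()["Sub"] = 2;
    g_initialized_before_main = true;
  }
};

// Namespace-scope object with a non-trivial constructor: a static initializer
// that runs during program startup, before main().
RegisterBuiltins g_register_builtins;

}  // namespace
```

dyld’s loading and symbol binding also happen before main(), which is why both dynamic libraries and static initializers were suspects at this stage.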

I didn’t know much about profiling on macOS, and normally I’d turn to Linux perf when trying to profile native code, but this regression happened specifically on macOS and didn’t reproduce on Linux, so it was also a good opportunity for me to learn how to do profiling/tracing properly on macOS.

4.1 Instruments

The most promising macOS profiling tool I found on the Internet was Instruments, but it was somewhat difficult to use the GUI to record a program that only runs for ~20ms. With a bit more digging I found that the trace can be captured using the command line (here out/Release/node does not need any arguments since I’ve already made the main function a no-op):

```
$ xctrace record --output . --template "Time Profiler" --launch -- out/Release/node
```

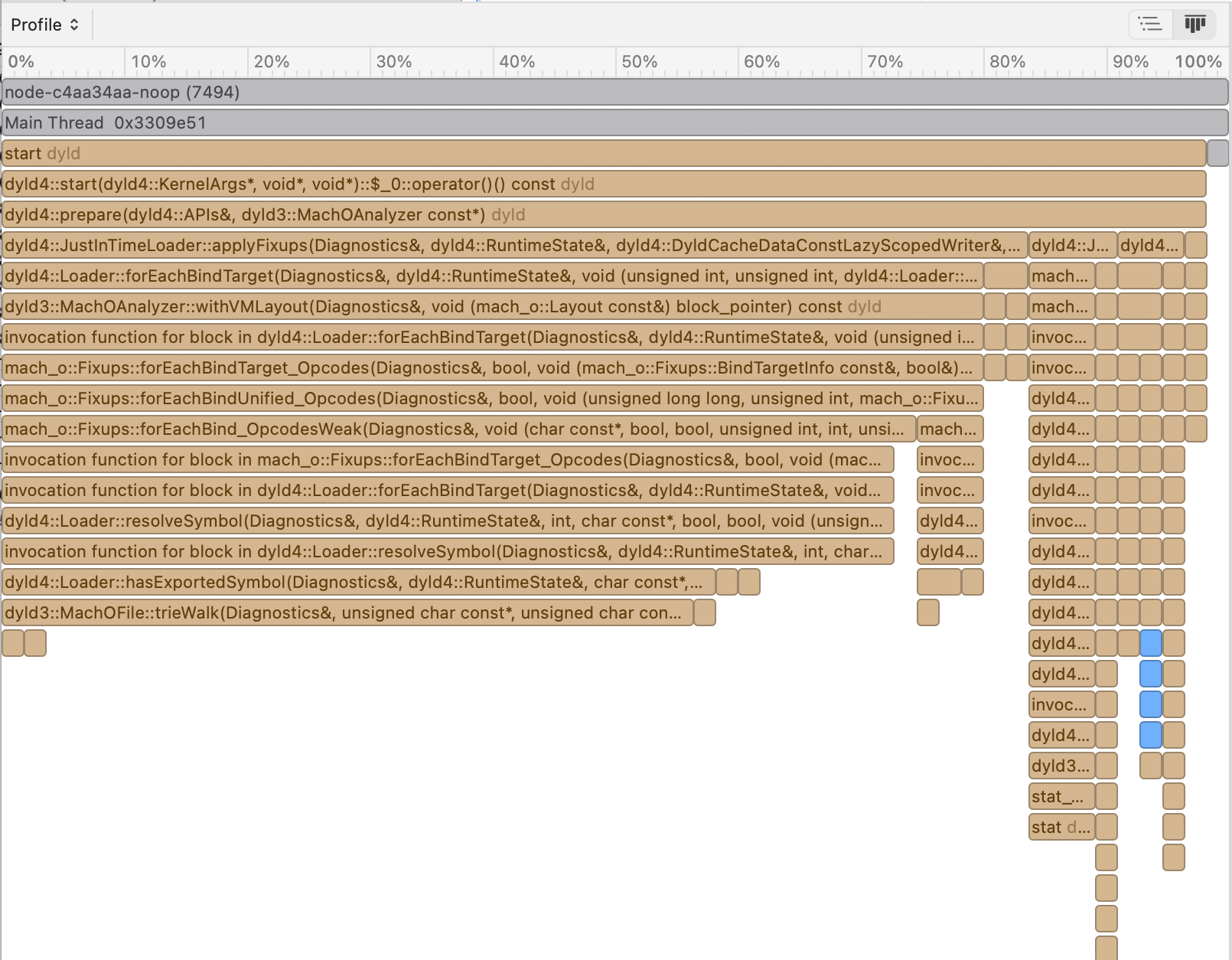

This produces a folder named something like Launch_node_2024-12-23_07.14.13_2B4EE915.trace, and open would load it using the Instruments app, which showed me something like this:

Nothing in static initialization stood out in the profiles. Most of the time seemed to be spent in dyld’s symbol fixups, which spent a lot of time walking the symbol tries, but this part was also prominent in the build without the regression. Since I was looking for the cause of a regression, comparing the relative time spent across the two profiles gave me few clues, and the absolute time in the profile of the regressed version even seemed lower than in the one without the regression, which weakened my confidence in the reliability of the profiles. I assumed the symbol fixups must come from the dynamic libraries, and as mentioned before, I couldn’t find any changes in the dynamic libraries between the two versions anyway. Considering the programs only ran for 20-30ms, which wouldn’t yield many samples, I discarded the profiles as too inaccurate to draw any conclusions from (spoiler alert: there was a clue in these profiles, just not enough on its own to guide a fix).
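The symbol tries mentioned above are prefix trees that dyld walks to look up symbol names. As a rough mental model only, here is a simplified character trie in C++; `SymbolTrie` is hypothetical, and the real Mach-O export trie is a serialized, ULEB128-encoded structure with multi-character edges, which this sketch does not reproduce. It counts the nodes visited to show that each lookup costs roughly as much as the name is long.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <memory>
#include <optional>
#include <string>

// Simplified prefix trie mapping symbol names to addresses.
class SymbolTrie {
 public:
  void Insert(const std::string& name, std::uint64_t address) {
    Node* node = &root_;
    for (char c : name) {
      auto& child = node->children[c];
      if (!child) child = std::make_unique<Node>();
      node = child.get();
    }
    node->address = address;
  }

  // Returns the address bound to `name` (if any) and reports, through
  // `steps`, how many trie nodes were visited along the way.
  std::optional<std::uint64_t> Lookup(const std::string& name,
                                      std::size_t* steps) const {
    const Node* node = &root_;
    *steps = 0;
    for (char c : name) {
      auto it = node->children.find(c);
      if (it == node->children.end()) return std::nullopt;
      node = it->second.get();
      ++*steps;
    }
    return node->address;  // nullopt if `name` is only a prefix
  }

 private:
  struct Node {
    std::optional<std::uint64_t> address;  // set if a symbol ends here
    std::map<char, std::unique_ptr<Node>> children;
  };
  Node root_;
};
```

Mangled C++ names can be long, so every extra symbol that needs a runtime lookup adds a bit of startup work.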

4.2 Samply

There is also an interesting sampling profiler that works on macOS called samply, which can display the profile using the Firefox profiler UI nicely. I tried it out with:

```
samply record --rate 10000 out/Release/node
```

Unfortunately, samply could not capture enough samples at all for a program that only ran for 20-30ms (sometimes it gets < 10 samples, sometimes it gets nothing). So once again it seemed a sampling profiler wasn’t the right choice for looking into this issue.
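A back-of-the-envelope calculation shows why sampling struggles here: even ideally, a profiler takes at most one sample per period while a single-threaded process runs. The hypothetical helpers below compute that ceiling for samply’s 10000 Hz rate and for a 5000 Hz dtrace profile probe (one sample every 200us).

```cpp
#include <cassert>
#include <cstdint>

// Upper bound on samples from a single-threaded process: one per sampling
// period for as long as it runs. Real profilers often get fewer.
constexpr std::int64_t MaxSamples(double rate_hz, double runtime_ms) {
  return static_cast<std::int64_t>(rate_hz * runtime_ms / 1000.0);
}

// Sampling period in microseconds for a given rate.
constexpr double PeriodUs(double rate_hz) { return 1e6 / rate_hz; }

static_assert(PeriodUs(5000) == 200.0, "5000 Hz means one sample per 200us");
static_assert(MaxSamples(10000, 20) == 200, "samply at 10000 Hz, 20ms run");
static_assert(MaxSamples(5000, 30) == 150, "5000 Hz probe, 30ms run");
```

The fewer than 10 samples samply actually captured were far below even this ceiling, and a ceiling of a few hundred samples is thin for attributing a difference of a few milliseconds.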

4.3 dtrace & dtruss

The last profiling/tracing tool I tried was dtrace. I used it a long time ago but generally tried to avoid it as it required the hassle of turning off System Integrity Protection (SIP). It is capable of both profiling as a sampling profiler and tracing system calls, so I hoped that it could give me a bit more insight into what might be done during static initialization.

To use dtrace on recent versions of macOS, it’s required to boot into recovery mode and turn off SIP. Then dtrace would be usable upon restart. When you are done using dtrace it’s also necessary to boot into recovery mode again and turn SIP back on or otherwise the system would be more vulnerable (now you see why I generally avoided this tool, because I am lazy :P).

I tried to perform sampling-based profiling with dtrace (this command tells dtrace to run out/Release/node and sample it every 200us when the CPU is executing code, dumping the stacks to node.stacks):

```
sudo dtrace -n 'profile-5000 /execname == "node"/ { @[ustack()] = count(); }' -c "out/Release/node" -o node.stacks
```

The shortest sampling interval I could set was 200us (i.e. 5000 Hz). Similar to samply, when run on the binary with a no-op main function, this didn’t yield enough samples for me to draw any conclusions. So I tried tracing the system calls using dtruss (which is basically a wrapper around dtrace) to see if any static initialization code was doing expensive syscalls:

```
sudo dtruss -a out/Release/node &> node.log
```

From the output of dtruss, it seemed the two different executables were doing exactly the same syscalls during initialization. The only difference was that in the traces of the one with the regression, the mprotect call that loads the executable into memory started a lot later than the one without the regression. But since the two were doing identical system calls - including loading the same dynamic libraries - before that mprotect, I was at a dead end again. The dynamic libraries loaded were the same, there was no outstanding code execution in static initialization that could be sampled, nor outstanding syscalls performed by static initialization. What else could be happening during that phase?

5. Checking build configurations

Now I started to wonder if something in the build configuration made loading the same set of dynamic libraries slower somehow. I remembered that V8 maintains a fork of Node.js to run integration tests in its CI. This fork is roughly in sync with the Node.js main branch but is built with a different toolchain and configuration: V8’s fork uses GN (which is also what Electron’s fork of Node.js uses), while Node.js’s official build still uses GYP (the build system that GN replaced in Chromium). Maybe trying a completely different build configuration would shed some light?

So I tried building V8’s fork out of curiosity. It turned out to be a lot faster than the Node.js official builds!

```
$ hyperfine "./node-main --version"
```

The bad news was, though, that there were 4000+ build commands executed for building Node.js (mostly compiler commands, in particular clang commands on macOS) and they were all very long. It would be like looking for a needle in a haystack if I didn’t have a clue what I was looking for.

We did look into some high-level differences between the two builds, for example:

- V8’s fork is compiled using a version of clang+llvm that’s almost tip-of-tree. I tried building Node.js with GYP and the toolchain overridden to the one used by V8’s fork, but it made no difference in performance.

- V8’s fork is compiled with a custom version of libc++ statically built in. But we were looking for the cause of a regression, and that regression happened between two versions that both link dynamically against the system libc++, so it wasn’t what we were looking for.

- V8’s fork uses lld (LLVM’s linker) instead of the macOS ld. I did try to build Node.js with lld using its GYP configs, but it made no difference. And again, the regression happened between two builds using identical toolchains, so the linker was not the factor we should be looking at.

- At one point, insufficient link-time optimization seemed a plausible suspect, and @anonrig tried building Node.js with LTO enabled by default (it had been a configure-time option). After some trial and error, LTO turned out to make no difference in startup performance either, even though it made the build significantly longer and the executable bigger. Also, V8’s build did not actually enable LTO, so it wasn’t LTO.

- There were also some theories that the executable size might have made a difference. We tried building Node.js without ICU which would make the executable ~30MB smaller by not bundling in full ICU data - yet the executable was still not faster to start up.

We were running out of plausible suspects at this point.

6. Checking symbol fixups

To find more leads, I ended up researching all the steps macOS does when loading an executable. The source code of the dynamic linker on macOS (dyld4) could be found online, but it would be much easier to check out some high-level overview instead. Then I found this very useful video that shared a lot of insights and tips - in particular, the DYLD_* environment variables one can use to log the loading steps (documentation is in man dyld).

Previously, I found several web pages mentioning DYLD_PRINT_STATISTICS and gave it a try - unfortunately it seemed to be gone in newer versions of dyld/macOS, so I didn’t look into any other variables, assuming that they were obsolete too. After watching the video I tried other DYLD_* environment variables I found in man dyld, and it seems plenty of them were still working. In particular, I tried DYLD_PRINT_BINDINGS=1, and scrolled through the outputs, which revealed that there were a lot more symbols being fixed up in the executable with the regression. After giving it a closer look, I noticed that many of them appeared to be weak symbols:

```
DYLD_PRINT_BINDINGS=1 ./node-c4aa34aa --version 2>&1 | grep "looking for weak-def symbol" | wc -l
```

I used a script, symbols.sh, to filter them out and demangle them, running it like this:

```
bash symbols.sh ~/projects/node/out/Release/node node-main
```
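The script itself isn’t reproduced here. As a rough C++ equivalent of the filter-and-demangle step, the sketch below assumes (unverified against dyld’s output format) that each matching DYLD_PRINT_BINDINGS line contains the mangled name as a token starting with __Z, possibly wrapped in punctuation. `DemangleMachO` and `WeakDefSymbols` are hypothetical helpers; the demangling uses abi::__cxa_demangle from <cxxabi.h>, provided by both libc++abi and libstdc++.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <cstdlib>
#include <cxxabi.h>
#include <istream>
#include <memory>
#include <sstream>
#include <string>
#include <vector>

struct FreeDeleter {
  void operator()(char* p) const { std::free(p); }  // __cxa_demangle mallocs
};

// Apple platforms prefix symbol names with an extra "_", so "__ZN3foo3barEv"
// is the Itanium-mangled "_ZN3foo3barEv". Returns the input if it can't be
// demangled.
std::string DemangleMachO(const std::string& symbol) {
  std::string itanium = symbol;
  if (itanium.rfind("__Z", 0) == 0) itanium.erase(0, 1);
  int status = 0;
  std::unique_ptr<char, FreeDeleter> out(
      abi::__cxa_demangle(itanium.c_str(), nullptr, nullptr, &status));
  return (status == 0 && out) ? std::string(out.get()) : symbol;
}

// Collects demangled names from log lines that mention weak-def lookups.
std::vector<std::string> WeakDefSymbols(std::istream& log) {
  std::vector<std::string> result;
  std::string line;
  while (std::getline(log, line)) {
    if (line.find("looking for weak-def symbol") == std::string::npos) continue;
    std::istringstream tokens(line);
    std::string token;
    while (tokens >> token) {
      std::size_t start = token.find("__Z");
      if (start == std::string::npos) continue;
      // Keep only characters that can appear in a mangled name.
      std::size_t end = start;
      while (end < token.size() &&
             (std::isalnum(static_cast<unsigned char>(token[end])) ||
              token[end] == '_' || token[end] == '.')) {
        ++end;
      }
      result.push_back(DemangleMachO(token.substr(start, end - start)));
    }
  }
  return result;
}
```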

To my surprise, a lot of the weak symbols that were bound dynamically came from V8, which is statically linked into Node.js. I was puzzled - weren’t these symbols supposed to be resolved and bound at link time already?

I looked through the symbols to find more patterns, and one group stood out. They looked like this:

```
...
```

And there were quite a lot of them being resolved at runtime:

```
grep StaticCallInterfaceDescriptor | wc -l
```

These are C++ template instantiations from C++ source files generated by V8’s torque DSL (in a ninja build, the generated file would be ./out/Release/gen/torque-generated/interface-descriptors.inc). I remembered that the video happened to mention that weak symbols may come from C++ template instantiations. It seemed somewhat counterintuitive to me - shouldn’t C++ templates be resolved and bound at link time? If not, how could I make sure that they are?
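To see why template code yields weak symbols at all, here is a hypothetical, heavily simplified analogue of a CRTP-style descriptor template in the spirit of StaticCallInterfaceDescriptor. The names are made up and this is not V8’s code. Member functions of each specialization are implicitly instantiated wherever they are used, and any out-of-line copy the compiler emits is a weak definition, so identical copies from different object files can be merged at link time. Per the investigation here, without -fvisibility=hidden such weak definitions also keep default visibility, and on macOS they ended up being bound by dyld at startup.

```cpp
#include <cassert>
#include <string>

// Hypothetical CRTP base: each Derived gets its own set of instantiations.
template <typename Derived>
struct CallDescriptorBase {
  // Defined in the class template, so any translation unit that uses a given
  // specialization instantiates it; an out-of-line copy, when emitted, is a
  // weak definition in that object file.
  static int RegisterCount() { return Derived::kParameterCount + 1; }
  static std::string Name() { return Derived::kName; }
};

struct AddDescriptor : CallDescriptorBase<AddDescriptor> {
  static constexpr int kParameterCount = 2;
  static constexpr const char* kName = "Add";
};

struct LoadDescriptor : CallDescriptorBase<LoadDescriptor> {
  static constexpr int kParameterCount = 3;
  static constexpr const char* kName = "Load";
};
```

Multiply this by every generated descriptor and every member function, and the number of weak symbols grows quickly.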

…wait a minute, why are these symbols even showing up in the executable? They are all internal and aren’t supposed to be visible once the executable is built! There must be something wrong with the build commands.

7. Checking build commands

This time, I knew specifically which of those 4000+ commands to look at: the command that compiles the code using interface-descriptors.inc and the commands that link it into the final build (and luckily, what I was looking for turned out to be in the first command).

To dump all the build commands, I ran this from the Node.js build directory. (Personally I use ninja for local development because it gives me a nice progress bar. It’s unofficial and not what Node.js uses to produce releases, but the builds aren’t too different from the make builds.)

```
ninja -n --verbose -C out/Release node > build-commands.txt
```

The command used to build interface-descriptors.inc, in particular, looks like this:

```
[2986/4164] ccache clang++ -MMD -MF obj/deps/v8/src/codegen/v8_base_without_compiler.interface-descriptors.o.d -D_GLIBCXX_USE_CXX11_ABI=1 -DNODE_OPENSSL_CONF_NAME=nodejs_conf -DNODE_OPENSSL_HAS_QUIC -DICU_NO_USER_DATA_OVERRIDE -DV8_GYP_BUILD -DV8_TYPED_ARRAY_MAX_SIZE_IN_HEAP=64 -D_DARWIN_USE_64_BIT_INODE=1 -DOPENSSL_NO_PINSHARED -DOPENSSL_THREADS -DV8_TARGET_ARCH_ARM64 -DV8_HAVE_TARGET_OS -DV8_TARGET_OS_MACOS '-DV8_EMBEDDER_STRING="-node.11"' -DENABLE_DISASSEMBLER -DV8_PROMISE_INTERNAL_FIELD_COUNT=1 -DOBJECT_PRINT -DV8_INTL_SUPPORT -DV8_ATOMIC_OBJECT_FIELD_WRITES -DV8_ENABLE_LAZY_SOURCE_POSITIONS -DV8_USE_SIPHASH -DV8_SHARED_RO_HEAP -DNDEBUG -DV8_WIN64_UNWINDING_INFO -DV8_ENABLE_REGEXP_INTERPRETER_THREADED_DISPATCH -DV8_USE_ZLIB -DV8_ENABLE_SPARKPLUG -DV8_ENABLE_MAGLEV -DV8_ENABLE_TURBOFAN -DV8_ENABLE_SYSTEM_INSTRUMENTATION -DV8_ENABLE_WEBASSEMBLY -DV8_ENABLE_JAVASCRIPT_PROMISE_HOOKS -DV8_ENABLE_CONTINUATION_PRESERVED_EMBEDDER_DATA -DV8_ALLOCATION_FOLDING -DV8_ALLOCATION_SITE_TRACKING -DV8_ADVANCED_BIGINT_ALGORITHMS -DICU_UTIL_DATA_IMPL=ICU_UTIL_DATA_STATIC -DUCONFIG_NO_SERVICE=1 -DU_ENABLE_DYLOAD=0 -DU_STATIC_IMPLEMENTATION=1 -DU_HAVE_STD_STRING=1 -DUCONFIG_NO_BREAK_ITERATION=0 -I../../deps/v8 -I../../deps/v8/include -Igen/inspector-generated-output-root -I../../deps/v8/third_party/inspector_protocol -Igen -Igen/generate-bytecode-output-root -I../../deps/icu-small/source/i18n -I../../deps/icu-small/source/common -I../../deps/v8/third_party/zlib -I../../deps/v8/third_party/zlib/google -I../../deps/v8/third_party/abseil-cpp -I../../deps/v8/third_party/fp16/src/include -O3 -gdwarf-2 -fno-strict-aliasing -mmacosx-version-min=11.0 -arch arm64 -Wall -Wendif-labels -W -Wno-unused-parameter -std=gnu++20 -stdlib=libc++ -fno-rtti -fno-exceptions -Wno-invalid-offsetof -c ../../deps/v8/src/codegen/interface-descriptors.cc -o obj/deps/v8/src/codegen/v8_base_without_compiler.interface-descriptors.o
```

Comparing this against the build command of V8’s fork:

```
[3021/3853] ../../third_party/llvm-build/Release+Asserts/bin/clang++ -MMD -MF obj/v8/v8_base_without_compiler/interface-descriptors.o.d -D__STDC_CONSTANT_MACROS -D__STDC_FORMAT_MACROS -D_FORTIFY_SOURCE=2 -D__ARM_NEON__=1 -DCR_XCODE_VERSION=1610 -DCR_CLANG_REVISION=\"llvmorg-20-init-13894-g8cb44859-1\" -D_LIBCPP_HARDENING_MODE=_LIBCPP_HARDENING_MODE_EXTENSIVE -D_LIBCPP_DISABLE_VISIBILITY_ANNOTATIONS -D_LIBCXXABI_DISABLE_VISIBILITY_ANNOTATIONS -D_LIBCPP_INSTRUMENTED_WITH_ASAN=0 -DCR_LIBCXX_REVISION=9d470adcffe6740acbf0597e6cfe8c09178610b1 -DTMP_REBUILD_HACK -DNDEBUG -DNVALGRIND -DDYNAMIC_ANNOTATIONS_ENABLED=0 -DV8_TYPED_ARRAY_MAX_SIZE_IN_HEAP=64 -DV8_EMBEDDER_STRING=\"-node.0\" -DV8_INTL_SUPPORT -DV8_ATOMIC_OBJECT_FIELD_WRITES -DV8_ENABLE_LAZY_SOURCE_POSITIONS -DV8_WIN64_UNWINDING_INFO -DV8_ENABLE_REGEXP_INTERPRETER_THREADED_DISPATCH -DV8_SHORT_BUILTIN_CALLS -DV8_EXTERNAL_CODE_SPACE -DV8_ENABLE_SPARKPLUG -DV8_ENABLE_MAGLEV -DV8_ENABLE_TURBOFAN -DV8_ENABLE_SYSTEM_INSTRUMENTATION -DV8_ENABLE_WEBASSEMBLY -DV8_ENABLE_JAVASCRIPT_PROMISE_HOOKS -DV8_ENABLE_CONTINUATION_PRESERVED_EMBEDDER_DATA -DV8_ALLOCATION_FOLDING -DV8_ALLOCATION_SITE_TRACKING -DV8_SCRIPTORMODULE_LEGACY_LIFETIME -DV8_ADVANCED_BIGINT_ALGORITHMS -DV8_STATIC_ROOTS -DV8_USE_ZLIB -DV8_USE_LIBM_TRIG_FUNCTIONS -DV8_ENABLE_MAGLEV_GRAPH_PRINTER -DV8_ENABLE_EXTENSIBLE_RO_SNAPSHOT -DV8_ENABLE_BLACK_ALLOCATED_PAGES -DV8_WASM_RANDOM_FUZZERS -DV8_ARRAY_BUFFER_INTERNAL_FIELD_COUNT=2 -DV8_ARRAY_BUFFER_VIEW_INTERNAL_FIELD_COUNT=2 -DV8_PROMISE_INTERNAL_FIELD_COUNT=1 -DV8_COMPRESS_POINTERS -DV8_COMPRESS_POINTERS_IN_SHARED_CAGE -DV8_31BIT_SMIS_ON_64BIT_ARCH -DV8_DEPRECATION_WARNINGS -DV8_HAVE_TARGET_OS -DV8_TARGET_OS_MACOS -DCPPGC_CAGED_HEAP -DCPPGC_YOUNG_GENERATION -DCPPGC_POINTER_COMPRESSION -DCPPGC_ENABLE_LARGER_CAGE -DCPPGC_SLIM_WRITE_BARRIER -DV8_TARGET_ARCH_ARM64 -DV8_RUNTIME_CALL_STATS -DBUILDING_V8_SHARED -DABSL_ALLOCATOR_NOTHROW=1 -DU_USING_ICU_NAMESPACE=0 -DU_ENABLE_DYLOAD=0 -DUSE_CHROMIUM_ICU=1 -DU_ENABLE_TRACING=1 -DU_ENABLE_RESOURCE_TRACING=0 -DU_STATIC_IMPLEMENTATION -DICU_UTIL_DATA_IMPL=ICU_UTIL_DATA_STATIC -I../.. -Igen -I../../buildtools/third_party/libc++ -I../../v8 -I../../v8/include -Igen/v8 -I../../third_party/abseil-cpp -Igen/v8/include -I../../third_party/icu/source/common -I../../third_party/icu/source/i18n -I../../third_party/fp16/src/include -I../../third_party/fast_float/src/include -I../../third_party/zlib -Wall -Wextra -Wimplicit-fallthrough -Wextra-semi -Wunreachable-code-aggressive -Wthread-safety -Wgnu -Wno-gnu-anonymous-struct -Wno-gnu-conditional-omitted-operand -Wno-gnu-include-next -Wno-gnu-label-as-value -Wno-gnu-redeclared-enum -Wno-gnu-statement-expression -Wno-gnu-zero-variadic-macro-arguments -Wno-zero-length-array -Wunguarded-availability -Wno-missing-field-initializers -Wno-unused-parameter -Wno-psabi -Wloop-analysis -Wno-unneeded-internal-declaration -Wno-cast-function-type -Wno-thread-safety-reference-return -Wno-nontrivial-memcall -Wshadow -Werror -fno-delete-null-pointer-checks -fno-ident -fno-strict-aliasing -fstack-protector -fcolor-diagnostics -fmerge-all-constants -fno-sized-deallocation -fcrash-diagnostics-dir=../../tools/clang/crashreports -mllvm -instcombine-lower-dbg-declare=0 -mllvm -split-threshold-for-reg-with-hint=0 -ffp-contract=off -fcomplete-member-pointers --target=arm64-apple-macos -mno-outline -Wno-builtin-macro-redefined -D__DATE__= -D__TIME__= -D__TIMESTAMP__= -ffile-compilation-dir=. -no-canonical-prefixes -fno-omit-frame-pointer -g0 -isysroot ../../../../../../../Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/SDKs/MacOSX15.1.sdk -mmacos-version-min=11.0 -fvisibility=hidden -Wheader-hygiene -Wstring-conversion -Wtautological-overlap-compare -Wunreachable-code -Wno-shadow -Wctad-maybe-unsupported -Wno-invalid-offsetof -Wshorten-64-to-32 -Wmissing-field-initializers -O3 -fno-math-errno -Wexit-time-destructors -Wno-invalid-offsetof -Wenum-compare-conditional -Wno-c++11-narrowing-const-reference -Wno-missing-template-arg-list-after-template-kw -Wno-dangling-assignment-gsl -std=c++20 -Wno-trigraphs -fno-exceptions -fno-rtti -nostdinc++ -isystem../../third_party/libc++/src/include -isystem../../third_party/libc++abi/src/include -fvisibility-inlines-hidden -c ../../v8/src/codegen/interface-descriptors.cc -o obj/v8/v8_base_without_compiler/interface-descriptors.o
```

There were many differences in the flags, but one in particular stood out: -fvisibility=hidden was missing from Node.js’s official builds. V8 already marks all its public APIs properly with visibility macros, so it should be built with all symbols hidden by default and only the symbols marked as public exposed. So I tweaked the GYP configurations to add -fvisibility=hidden to the compiler flags on macOS and compiled again, and the resulting build turned out to be even faster than the one before the regression! (In retrospect, I should’ve tried -fvisibility-inlines-hidden too, but it was not exactly straightforward with GYP and I was lazy :P)
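With two commands this long, one quick way to surface such differences is a set difference over their whitespace-separated flags. A minimal C++ sketch, not necessarily how the comparison was done here; `Flags` and `MissingFrom` are hypothetical helpers, and the naive split ignores shell quoting, so it is only good for eyeballing flags like -fvisibility=hidden.

```cpp
#include <algorithm>
#include <cassert>
#include <iterator>
#include <set>
#include <sstream>
#include <string>
#include <vector>

// Splits a command line on whitespace into a sorted set of tokens. Quoted
// values containing spaces would be split incorrectly.
std::set<std::string> Flags(const std::string& command) {
  std::istringstream in(command);
  std::istream_iterator<std::string> begin(in), end;
  return std::set<std::string>(begin, end);
}

// Returns the tokens present in command `a` but missing from command `b`,
// in sorted order.
std::vector<std::string> MissingFrom(const std::string& a,
                                     const std::string& b) {
  const std::set<std::string> fa = Flags(a);
  const std::set<std::string> fb = Flags(b);
  std::vector<std::string> out;
  std::set_difference(fa.begin(), fa.end(), fb.begin(), fb.end(),
                      std::back_inserter(out));
  return out;
}
```

Fed the two commands above, the output still lists many warning and define flags, but it is far shorter than the raw commands.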

Breaking it down

It appears that on macOS, when a static library is compiled without -fvisibility=hidden, its C++ template instantiations can leave behind weak symbols that are resolved and bound at runtime, even though a C++ programmer might intuitively assume that templates in a statically linked library are resolved at link time (at least, it appears so with GCC + ld on Linux). Technically there’s nothing wrong with this behavior, as the C++ standard doesn’t say how the one definition rule (ODR) should be implemented, and using weak symbols to implement it seems to be a popular technique. Other toolchains somehow avoid leaking this implementation detail of C++ templates into runtime symbol resolution, but clang + ld/lld on macOS doesn’t.
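In source terms, the setup described in the TL;DR looks roughly like the sketch below: an export macro that forces default visibility on public APIs, combined with -fvisibility=hidden for everything else. `MYLIB_EXPORT`, `mylib`, `Twice` and `PublicTwice` are hypothetical names, in the style of (but not copied from) V8’s visibility macros.

```cpp
#include <cassert>

// Symbols marked with this macro keep default visibility even when the code
// is compiled with -fvisibility=hidden; everything else becomes hidden.
#if defined(__GNUC__) || defined(__clang__)
#define MYLIB_EXPORT __attribute__((visibility("default")))
#else
#define MYLIB_EXPORT
#endif

namespace mylib {

// Internal helper template: under -fvisibility=hidden its instantiations are
// hidden, so they are not exported from the final binary.
template <typename T>
T Twice(T value) {
  return value + value;
}

// Public API: explicitly visible regardless of -fvisibility.
MYLIB_EXPORT int PublicTwice(int value) { return Twice(value); }

}  // namespace mylib
```

Built with clang++ -fvisibility=hidden, only mylib::PublicTwice should remain exported, while the Twice instantiations stay hidden and can be resolved entirely at link time.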

During the V8 upgrade that led to this visible regression, a huge number of C++ template instantiations were added (coming from generated C++ source files), leading to an explosion in the number of weak symbols resolved at runtime (from 1000+ to 7000+). Node.js was already paying some of this overhead because of the insufficient visibility settings, but the explosion of weak symbols really made it show. That also explains why fixing the visibility setting made the build faster than it was before the regression, and why the regression worsened as Node.js continued to upgrade V8 and pick up more and more C++ template instantiations.

Final notes

I sent a PR to enable -fvisibility=hidden when building V8 in Node.js on macOS. The nightly builds already showed the difference:

```
$ hyperfine "/Users/joyee/.nvs/nightly/24.0.0-nightly20241219756077867b/arm64/bin/node --version" "/Users/joyee/.nvs/nightly/24.0.0-nightly202412188253290d60/arm64/bin/node --version" --warmup 10
```

Locally, this also shaved ~10MB off the binary:

```
$ ls -lah out/Release/node
```

The size reduction does not yet show up in the nightly builds, though, possibly because the release infrastructure still runs a very old version of macOS with a very old toolchain. Maybe it will show up in the releases once the macOS upgrade of the Jenkins CI is finally complete.

There is more to be done, e.g. enabling -fvisibility-inlines-hidden (which requires some extra tweaking due to GYP quirks) and enabling hidden visibility on other platforms (while it does not speed anything up on Linux, it helps make the build smaller). At the time of writing, though, changing compiler options has the unfortunate consequence of invalidating the ccache in Node.js’s on-premise Jenkins CI and making the pull request testing jobs queue up, so I have deferred the work to a later time when the CI is less busy.

Some other parts of Node.js should also be compiled with hidden visibility by default, but I am afraid the other headers that Node.js exposes have not yet marked their public symbols explicitly enough. Without careful vetting of these public headers, hiding symbols by default could break addons in the wild, so I’ll leave that as a future exercise.